Prompt engineering comes up more and more often these days. But what exactly is this technique? When and how can we use it?

Prompt engineering is gaining attention because it is closely linked to a technology that is transforming our lives: large language models (LLMs). Tools like Gemini and ChatGPT are being used more widely by the general public, partly due to their integration into everyday products, such as search engines like Bing or the virtual assistants on smartphones like the iPhone 16.

However, when you have such a powerful tool at your disposal, it’s crucial to know how to use it effectively. Prompt engineering is a technique that allows us to steer a large language model’s output to meet our needs.

Google defines it as “the art and the science of designing prompts to guide AI models, towards generating the desired responses”. But what is a prompt?

The prompt is essentially the instructions we give to a large language model, expressed in written or spoken language. Since the LLM is not human, we may need to follow certain “verbal” guidelines to ensure it understands us correctly. After all, the LLM adheres to a fundamental principle of computer and data science:

“Garbage in, garbage out.”

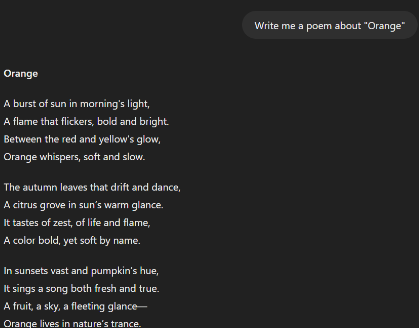

If we don’t explain ourselves properly, the LLM may not understand us. Even worse, if we aren’t aware of the LLM’s limitations, it might give us incorrect answers or generate misleading content. In this example, we are asking for a poem about “Orange”. Is it the company? Is it the fruit? Is it the color? Is it the French city? The LLM can’t read our mind (not yet).

LLMs can handle an impressive range of tasks, even without examples. This is known as zero-shot prompting: we state the task and nothing else. Whether it’s generating a story, answering a question, or translating a phrase, LLMs are quite capable of figuring things out on their own.

LLMs are surprisingly good at zero-shot tasks because they’ve been trained on massive datasets, allowing them to draw on general patterns. However, even though they can manage a wide variety of requests, zero-shot prompting sometimes lacks precision or clarity—especially if the task is complex or specific.

Now, imagine you’re organizing a party for your Moroccan friend. You ask your favorite LLM for a list of the five most popular Moroccan dishes. The model responds with a list of traditional dishes like couscous and tagine. It’s a good start, but something’s missing. You were hoping for more details, like ingredients, difficulty levels, and whether the dishes are vegan, vegetarian, or otherwise.

In this case, zero-shot prompting gives you a general response, but it lacks the depth you need. This is where few-shot prompting comes in handy—by giving the model a few examples of the type of answer you’re looking for, you can guide it toward more detailed and relevant output.

Please list the five most popular Moroccan dishes, including ingredients, difficulty level, and whether each dish is vegan or vegetarian.

Here's an example format:

Couscous

Ingredients: Semolina, vegetables, lamb or chicken

Difficulty: Moderate

Type: Can be made vegetarian or with meat

When you provide a few examples upfront, the LLM tends to stick to the structure you’ve shown, which reduces the risk of hallucinations and produces more relevant, detailed, and accurate results.
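If you build such prompts often, it can help to assemble them in code. The snippet below is a minimal sketch of that idea: the helper names `format_example` and `build_few_shot_prompt` are made up for this illustration, and the example data is simply the couscous entry from the prompt above.

```python
def format_example(example: dict[str, str]) -> str:
    """Render one example: the dish name, then one 'Field: value' line per field."""
    fields = [f"{key}: {value}" for key, value in example.items() if key != "Name"]
    return "\n".join([example["Name"], *fields])


def build_few_shot_prompt(task: str, examples: list[dict[str, str]]) -> str:
    """Join the task, a short cue, and the formatted examples into one prompt."""
    parts = [task, "Here's an example format:"]
    parts.extend(format_example(example) for example in examples)
    return "\n\n".join(parts)


prompt = build_few_shot_prompt(
    task=(
        "Please list the five most popular Moroccan dishes, including ingredients, "
        "difficulty level, and whether each dish is vegan or vegetarian."
    ),
    examples=[
        {
            "Name": "Couscous",
            "Ingredients": "Semolina, vegetables, lamb or chicken",
            "Difficulty": "Moderate",
            "Type": "Can be made vegetarian or with meat",
        }
    ],
)
print(prompt)
```

Adding a second or third dish to `examples` is usually enough to make the expected structure unambiguous for the model.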

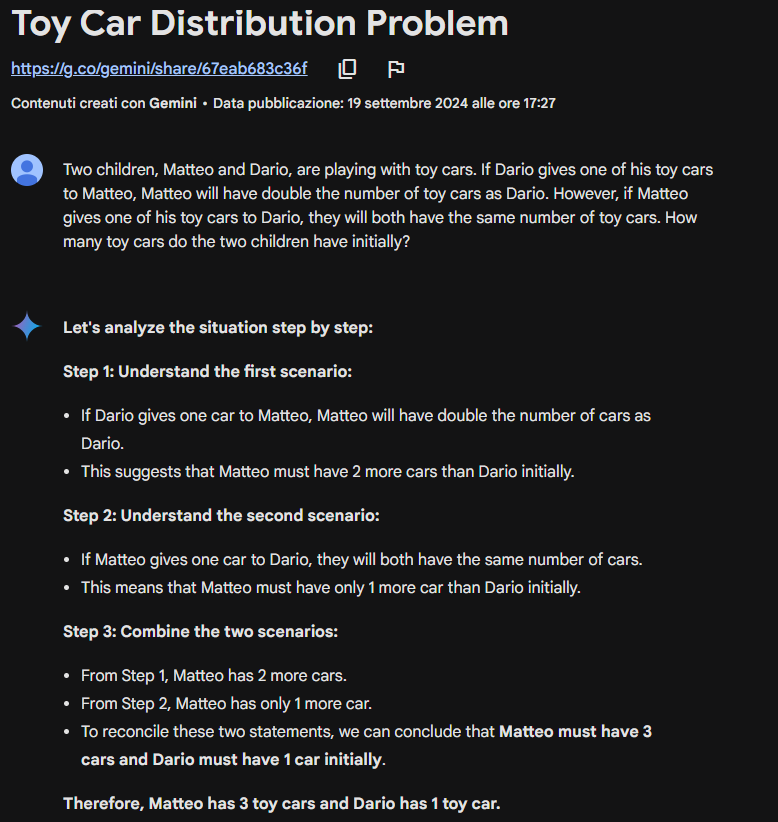

Sometimes zero-shot and few-shot techniques are not enough. The model may need more room to “think” and work out the correct answer rather than jump to a wrong conclusion. This is often the case with mathematical problems.

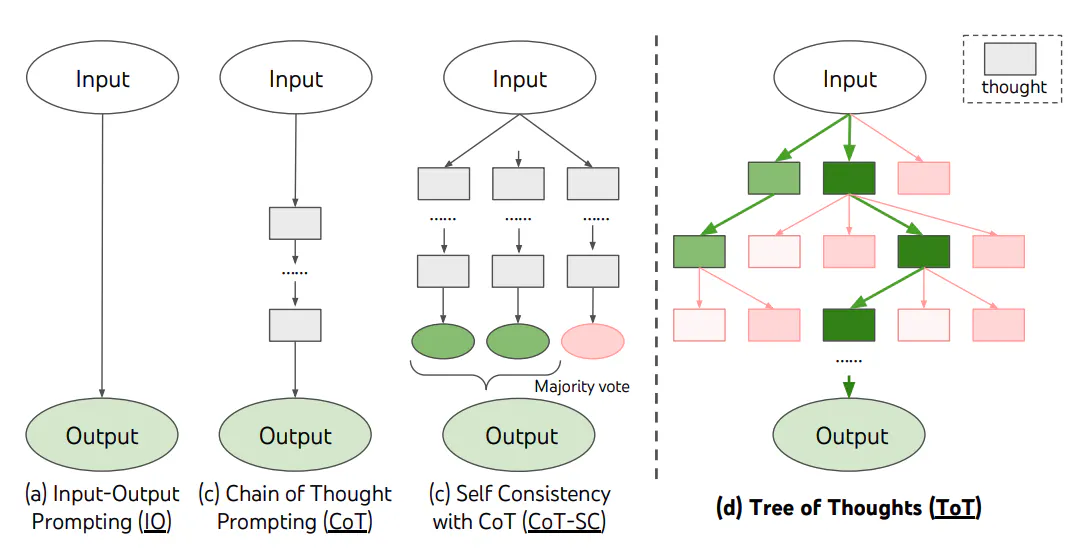

The chain of thought technique encourages the model to break down its thought process and work through each stage of reasoning rather than producing an answer in one step. This structured approach significantly improves the model’s ability to arrive at the correct solution, especially for problems requiring deeper understanding or logical steps.

Additionally, the chain of thought technique can be applied in different ways, much like other prompting methods. It has both zero-shot and few-shot versions:

- In the zero-shot version, the model is simply invited to think step by step, without being given specific examples.

- In the few-shot version, the model is provided with a few examples of how to break down problems into steps. By observing these examples, the model can better understand how to structure its reasoning, increasing accuracy and reliability in more complex or unfamiliar tasks.
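The two variants differ only in how the prompt is put together, which a short sketch can make concrete. The function names and the apple question below are illustrative assumptions; “Let's think step by step” is one commonly used trigger phrase for the zero-shot variant, not the only possible wording.

```python
def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: no examples, just an invitation to reason step by step."""
    return f"Q: {question}\nA: Let's think step by step."


def few_shot_cot(question: str, worked_examples: list[tuple[str, str]]) -> str:
    """Few-shot CoT: show worked solutions first, then ask the new question."""
    blocks = [f"Q: {q}\nA: {reasoning}" for q, reasoning in worked_examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# An illustrative worked example (invented for this sketch).
worked = [
    (
        "A shop has 23 apples. It uses 20 and then buys 6 more. How many apples does it have?",
        "It starts with 23 apples. After using 20, 23 - 20 = 3 remain. "
        "After buying 6 more, 3 + 6 = 9. The answer is 9.",
    )
]

new_question = "A library has 40 books. It lends out 15 and receives 8 new ones. How many books does it have?"
print(zero_shot_cot(new_question))
print()
print(few_shot_cot(new_question, worked))
```

Ending the worked example with a fixed phrase such as “The answer is 9.” also makes the final answer easier to pick out of the model’s reply later.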

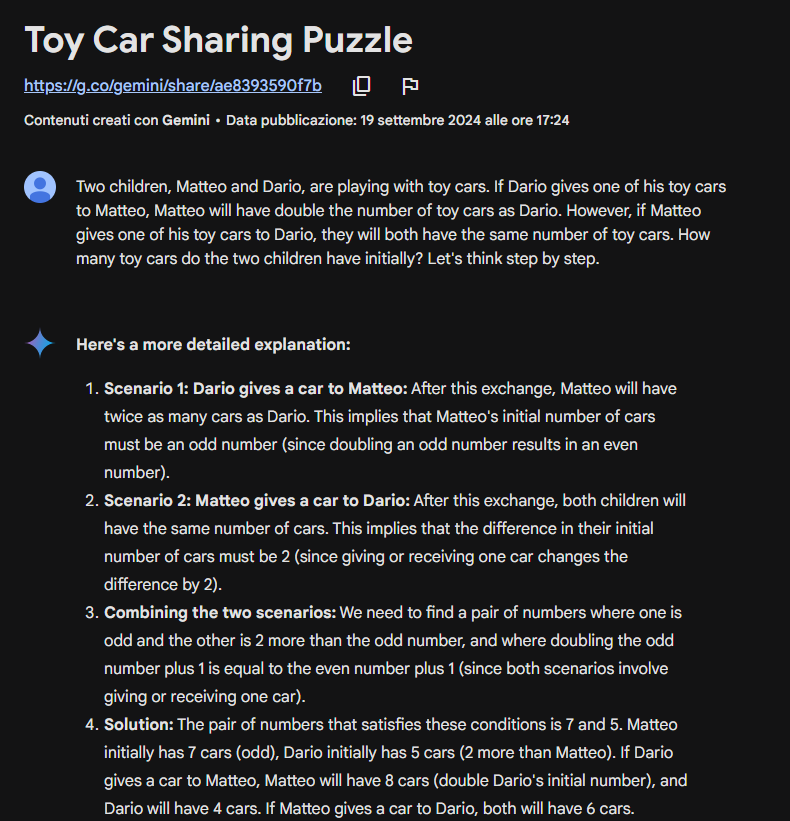

In this example, zero-shot Chain of Thought was enough for the model to give a correct answer. In some cases, even CoT may not be good enough…

Indeed, while CoT encourages the model to think step by step, there is a potential issue: since each step is based on the previous one, the model can sometimes lose sight of the bigger picture.

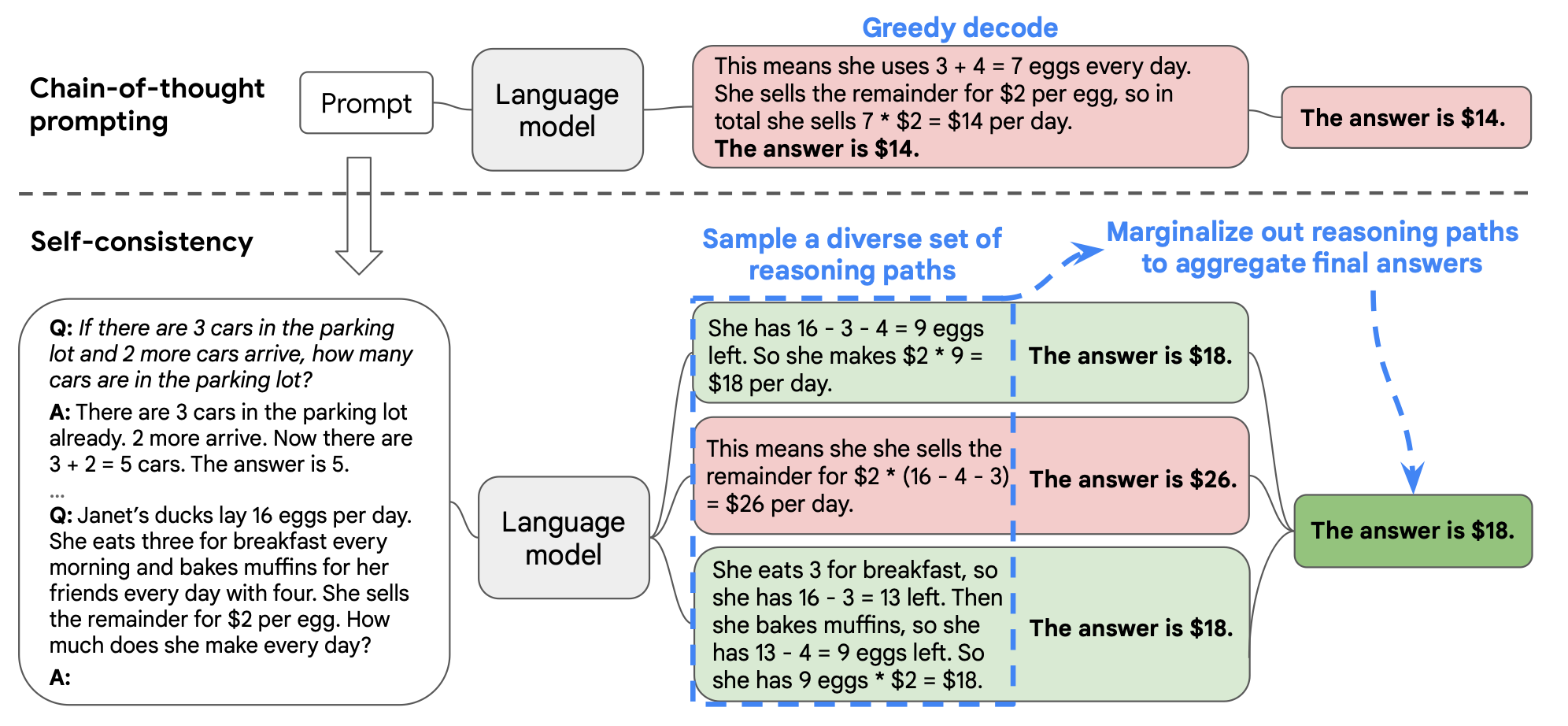

This issue is associated with naive greedy decoding, where the model commits to the single most likely continuation at each step. It makes decisions locally, focusing too narrowly on the immediate step without considering the broader context, which can lead to incoherent or incorrect answers.

Self-Consistency offers a solution to this problem. Instead of relying on a single pass of reasoning (which may be flawed due to over-reliance on prior steps), Self-Consistency generates multiple independent reasoning paths and then selects the most consistent or common answer from them.

Self-Consistency simulates human thinking, which involves the ability to evaluate different options before making a decision. In a way, it can also be seen as a form of collective intelligence, as only the most common or consistent answer is considered correct.

The final step of aggregating and selecting the answer can be performed using a standard algorithm, such as a majority vote, or by an LLM itself. This last approach is known as Universal Self-Consistency.
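The “standard algorithm” variant is easy to sketch as a majority vote. In the snippet below, `sample` is a hypothetical callable standing in for a real model call made with some randomness (so that each call can follow a different reasoning path), and the canned completions are invented so the example runs offline. It also assumes every completion ends with a phrase like “The answer is 9.”

```python
import re
from collections import Counter
from typing import Callable, Optional


def extract_answer(completion: str) -> Optional[str]:
    """Pull the number out of a completion that states 'The answer is <number>'."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


def self_consistent_answer(
    sample: Callable[[str], str], prompt: str, n_paths: int = 5
) -> Optional[str]:
    """Sample several reasoning paths and return the most frequent final answer."""
    answers = []
    for _ in range(n_paths):
        answer = extract_answer(sample(prompt))
        if answer is not None:  # ignore paths that never reach an answer
            answers.append(answer)
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Canned completions standing in for five independent model samples.
canned = iter([
    "23 - 20 = 3, then 3 + 6 = 9. The answer is 9.",
    "Using 20 leaves 3 apples; buying 6 gives 9. The answer is 9.",
    "23 + 6 = 29, minus 2 is 27. The answer is 27.",
    "3 apples remain, plus 6 new ones. The answer is 9.",
    "I am not sure how to proceed.",
])


def fake_sample(prompt: str) -> str:
    return next(canned)


result = self_consistent_answer(fake_sample, "How many apples does the shop have?")
print(result)
```

One flawed path (27) and one path with no answer are simply outvoted by the three paths that agree.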

The Tree of Thoughts approach can be seen as a hybrid method that combines the sequential reasoning of Chain of Thought with the parallel processing of Self-Consistency. This technique allows the model to explore multiple branches of reasoning simultaneously while maintaining a structured progression through each line of thought.

Experiments show that ToT significantly improves performance on tasks requiring planning or search, such as the Game of 24, Creative Writing, and Mini Crosswords.

For example, according to the research paper “Tree of Thoughts: Deliberate Problem Solving with Large Language Models,” GPT-4 with Chain of Thought prompting solved only 4% of the Game of 24 tasks, while the Tree of Thoughts method achieved an impressive success rate of 74%.
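To make the idea of branching and pruning more tangible, here is a rough sketch of a breadth-first search over “thoughts” for the Game of 24. As I understand the paper, the LLM both proposes the next steps and judges how promising each partial solution is. In this sketch both roles are replaced by deterministic stand-ins so it runs offline: `propose` enumerates every arithmetic step, and `score` is an exact feasibility check instead of a model’s opinion. All names and the `beam_width` value are assumptions made for the illustration.

```python
from fractions import Fraction
from itertools import combinations

TARGET = Fraction(24)


def propose(state):
    """Stand-in for 'LLM proposes next thoughts': combine two numbers with one operator."""
    numbers, steps = state
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = tuple(n for k, n in enumerate(numbers) if k not in (i, j))
        options = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            options.append((a / b, f"{a} / {b}"))
        if a != 0:
            options.append((b / a, f"{b} / {a}"))
        for value, text in options:
            yield rest + (value,), steps + (f"{text} = {value}",)


def can_reach_target(numbers):
    """Exact check of whether the remaining numbers can still make 24."""
    if len(numbers) == 1:
        return numbers[0] == TARGET
    return any(can_reach_target(child) for child, _ in propose((numbers, ())))


def score(state):
    """Stand-in for 'LLM evaluates a thought': 1 if still solvable, else 0."""
    return 1 if can_reach_target(state[0]) else 0


def is_solution(state):
    numbers, _ = state
    return len(numbers) == 1 and numbers[0] == TARGET


def tree_of_thoughts(root, beam_width=5, max_depth=3):
    """Expand every kept state, stop on a solution, keep only the best few branches."""
    frontier = [root]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        for candidate in candidates:
            if is_solution(candidate):
                return candidate
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return None


cards = tuple(Fraction(n) for n in (2, 3, 6, 8))
solution = tree_of_thoughts((cards, ()))
if solution is not None:
    print("\n".join(solution[1]))
```

With a real model, `score` would be an imperfect judgement rather than an exact check, which is why keeping several branches alive matters more in practice than it does here.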

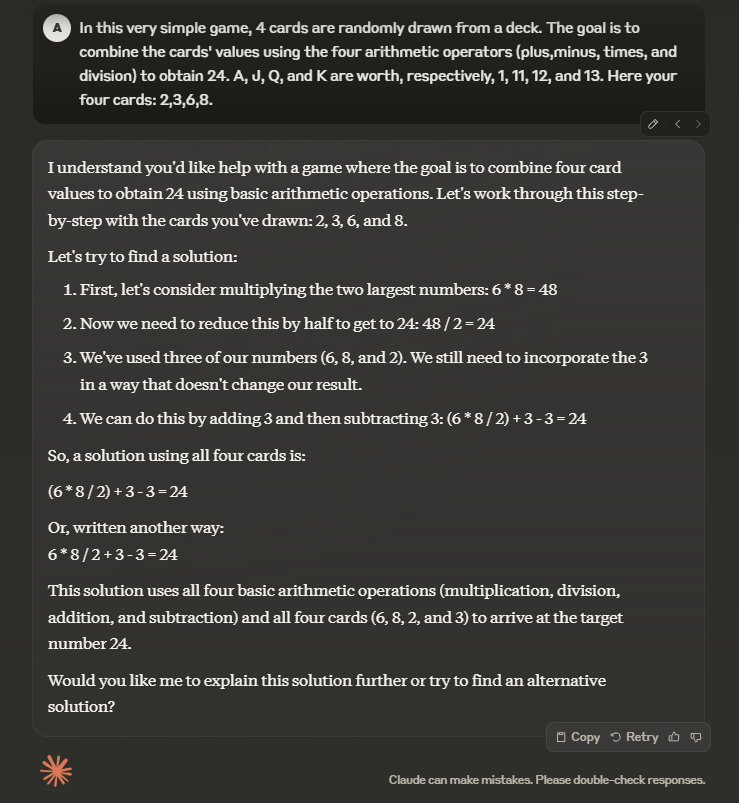

Since zero-shot prompting couldn’t yield the right solution here, we encourage you to try the more complex Tree of Thoughts prompting. Here’s an example of how to structure your prompt:

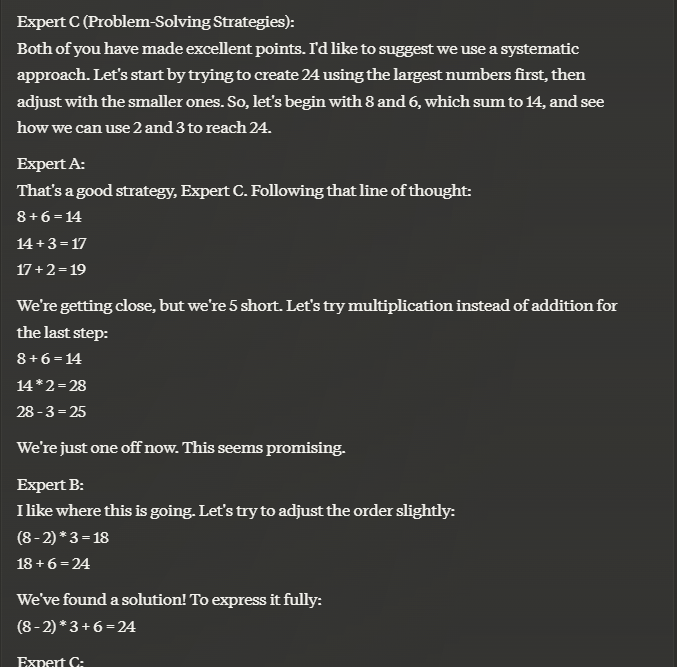

Imagine three highly intelligent experts working together to answer a question.

They will follow a tree of thoughts approach, where each expert shares their thought process step by step.

They will consider the input from others, refine their thoughts, and build upon the group's collective knowledge.

If an expert realizes their thought is incorrect, they will acknowledge it and withdraw from the discussion.

Continue this process until a definitive answer is reached.

The question is...

In this very simple game, 4 cards are randomly drawn from a deck.

The goal is to combine the cards' values using the four arithmetic operators (plus, minus, times, and division) to obtain 24. A, J, Q, and K are worth, respectively, 1, 11, 12, and 13.

Here are your four cards: 2, 3, 6, 8

In our example, using the given prompt, Claude 3.5 began by adopting three different personalities (I know it may sound weird) before arriving at the correct answer.
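Whatever expression a model proposes for this game can be checked mechanically, which is handy because a confident-sounding answer is not always a correct one. The sketch below is one possible checker: the function names are made up for this illustration, and the sample expression `6 * 3 + 8 - 2` is a valid answer worked out by hand for the sketch, not a transcript of the model’s reply.

```python
import ast
import operator
from collections import Counter
from fractions import Fraction

OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(node, used):
    """Evaluate a parsed expression exactly, recording every integer it uses."""
    if isinstance(node, ast.Expression):
        return evaluate(node.body, used)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in OPERATORS:
        left = evaluate(node.left, used)
        right = evaluate(node.right, used)
        return OPERATORS[type(node.op)](left, right)
    raise ValueError("only integers and + - * / are allowed")


def is_valid_solution(expression, cards, target=24):
    """True if the expression uses each card exactly once and equals the target."""
    used = []
    try:
        value = evaluate(ast.parse(expression, mode="eval"), used)
    except (ValueError, SyntaxError, ZeroDivisionError):
        return False
    return value == target and Counter(used) == Counter(cards)


cards = [2, 3, 6, 8]
print(is_valid_solution("6 * 3 + 8 - 2", cards))    # uses all four cards
print(is_valid_solution("8 * 3", cards))            # reaches 24 but skips two cards
print(is_valid_solution("(2 + 6) * 3 * 8", cards))  # uses all cards, wrong total
```

Parsing with `ast` instead of calling `eval` keeps the check restricted to plain arithmetic, and `Fraction` avoids rounding surprises with division.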

Last but not least is the persona pattern. I saved this for last because it’s the most entertaining! The first time I used this pattern, I had ChatGPT role-play as Jesse Pinkman from Breaking Bad, and it was absolutely hilarious.

The persona pattern is a technique used to assign a specific role or personality to guide the model’s responses. You can prompt the model to take on various personas, such as a fictional character or a historical figure.

This approach helps tailor the model’s responses to be more relevant and aligned with the desired tone or type of information, enhancing the overall interaction and making it more engaging.
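In chat-style interfaces, the persona usually goes into an instruction that precedes the actual question. The sketch below assumes a list of `role`/`content` messages, a layout modeled on common chat APIs rather than on any specific provider; the function name and the wording of the instruction are illustrative.

```python
def persona_messages(persona: str, question: str) -> list[dict[str, str]]:
    """Build a two-message conversation: a persona instruction, then the question."""
    return [
        {
            "role": "system",
            "content": (
                f"You are {persona}. Stay in character and answer in that "
                "persona's voice, vocabulary, and point of view."
            ),
        },
        {"role": "user", "content": question},
    ]


messages = persona_messages(
    persona="Jesse Pinkman from Breaking Bad",
    question="Can you explain what prompt engineering is?",
)
for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

Swapping the `persona` argument for a historical figure or a domain expert changes the tone of the reply without touching the question itself.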

In our case, we experimented with this approach—discover the results for yourself:

A historical figure persona example

In summary, prompt engineering encompasses various techniques to enhance interactions with large language models. From zero-shot and few-shot prompting to Chain of Thought, Self-Consistency, Tree of Thoughts, and the persona pattern, each method offers unique advantages for generating relevant and accurate responses.

As LLMs continue to evolve and become more powerful, there may be instances where complex prompts are unnecessary. However, understanding and leveraging these techniques can significantly enhance the quality of your interactions, making them more engaging and effective.